Faculty of Science Doctoral Training Centre in Artificial Intelligence

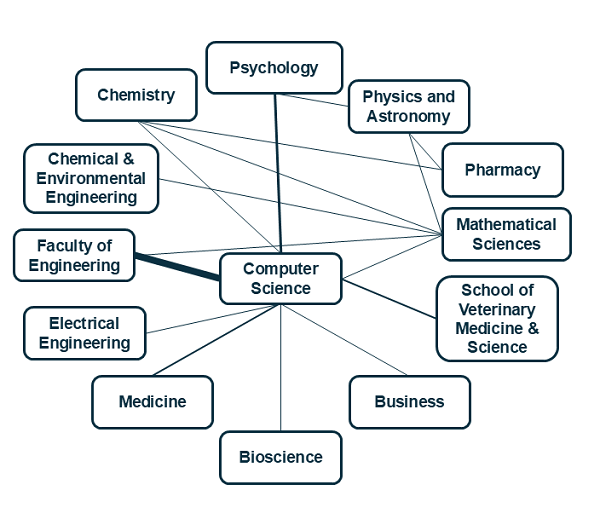

The Faculty of Science AI DTC is a new initiative by the University of Nottingham to train future researchers and leaders to address the most pressing challenges of the 21st Century through foundational and applied AI research on a cohort basis. The training and supervision will be delivered by a team of outstanding scholars from different disciplines cutting across Arts, Engineering, Medicine and Health Sciences, Science and Social Sciences.

The Faculty of Science will invite applications from Home students for fully-funded PhD studentships to carry out multidisciplinary research in the world-transforming field of artificial intelligence. The PhD students will have the opportunity to:

- Choose from a wide range of AI-related multidisciplinary research projects, working with world-class academic experts in their fields;

- Benefit from a fully-funded PhD with an attractive annual tax-free stipend;

- Join a multidisciplinary cohort to benefit from peer-to-peer learning and transferable skills development.

Studentship information

| | |
|---|---|
| Entry requirements | Minimum of a 2:1 bachelor's degree in a discipline relevant to the research topic (please consult with the potential supervisors), and a strong enthusiasm for artificial intelligence research. Studentships are open to Home students only. |
| Start date | 1st October 2025 |
| Funding | Annual tax-free stipend based on the UKRI rate (currently £20,780), Home tuition fee, and £3,000 p.a. Research Training Support Grant. |
| Duration | 3.5 years (42 months) |

The deadline to have completed and submitted your application to NottinghamHub is Monday 5th May 2025.

For information on how to apply click here

Rooted in the exceptional research environments of our Schools/Faculties at the University of Nottingham, the fourth cohort of the AI DTC will be organised around 23 multidisciplinary research topics. It is important that you identify a research topic for which you have the expected skill set and which matches your background and particular areas of interest. You will need to obtain support from the supervisors associated with your chosen research topic before submitting your official application. You can do this by exploring the research projects below and directly contacting the main supervisor of the project that interests you to discuss further details and to arrange an interview as appropriate. In your PhD studentship application, you will be asked to provide your CV, a personal statement, and the names of the supervisors you have support from. The personal statement should name a research/project topic from the following list and explain why you are interested in that topic and your motivation for doing a PhD. We encourage applicants to write the personal statement in their own words, based on their background and experience. Please follow the instructions above on how to apply.

Adaptive Haptic Skill Transfer for Human-Robot Collaboration in Nuclear Teleoperation (Computer Science, Mathematical Sciences)

Operating robots in nuclear environments presents unique challenges due to safety risks, complex manipulation tasks, and the need for precision. Effective operator training and autonomous robotic assistance are crucial to ensuring safe and efficient teleoperation in these extreme conditions.

This PhD project addresses two intertwined goals:

1. Improving Human Training: Developing adaptive haptic guidance strategies that help operators refine their skills through real-time feedback and tailored trajectory guidance.

2. Enhancing Robot Autonomy: Enabling robots to improve their own performance by learning from operator trajectory data, ultimately enhancing their ability to assist in complex tasks.

The project will explore the integration of learning from demonstration techniques with manifold-based statistical methods to analyse trajectory data collected from expert and novice operators. In this PhD project, you'll harness the power of data-driven techniques to uncover the underlying structure of movement data. Through custom discriminant analysis, you'll identify each trainee operator's unique learning curve, enabling precise quantification of skill, tracking of learning progress across successive attempts, and pinpointing of areas where trainees can benefit from guidance to enhance their abilities. Looking ahead, the metrics derived from our manifold-valued approach will enable us to customize the robotic autonomy through the paradigm of learning from demonstration, concentrating on pivotal stages within procedures, and determining the most effective curricula for accelerating learning.

By generating data tailored for a nuclear teleoperation application, the candidate will:

• Design adaptive haptic guidance methods that adjust to the operator's skill level, providing personalized feedback to accelerate learning.

• Identify key trajectory features that characterize expert performance and use these insights to train robots to emulate skilled behavior.

• Develop AI-driven algorithms that allow robots to refine their control strategies by learning from observed human behavior patterns.

The project will benefit from collaboration with a key industry partner in the nuclear domain, providing insights from real nuclear operators and potential secondments for access to robot platforms (e.g. Mascot, Dexter, Telbot) and advanced haptic interfaces for data collection and testing. An industry supervisor will also provide mentorship and guidance throughout the project.

We are looking for a motivated student with strong programming skills (C++/Python), analytical thinking, and an interest in human-robot interaction. Knowledge of statistics, robotics, or machine learning is advantageous.

Supervisors: Dr Ayse Kucukyilmaz (School of Computer Science), Prof. Simon Preston (School of Mathematical Sciences).

For further details and to arrange an interview please contact Dr Ayse Kucukyilmaz (School of Computer Science).

AI for additive manufacture of complex flow devices (Engineering, Computer Science)

AI-based generative methods can allow us to generate designs of structures with optimal or new functionality. These structures are often complex and therefore difficult to manufacture, but with additive manufacturing and its design freedoms such constraints do not apply. Consequently, the combination of AI, numerical methods and additive manufacturing can lead to a new paradigm in functionality, with any device dependent on shape for its function being available for augmentation in behaviour.

Our approach is to use recent developments in generative design, such as AI diffusion models, to identify designs that match the requirements of the user, allowing us to ‘dial up’ or select function and have the design provided to us. The model will be built and trained using numerical or computational models, validated by experiment, which will provide training data that we will augment with further data over the course of the PhD. As a final part of the jigsaw, parts built by additive manufacturing will be used to validate both the numerical models and the AI model.

Our initial focus will be on the automatic generation of mixing devices, commonly used for process intensification and downstream processing in multiple high value manufacturing operations such as pharmaceutical and chemical synthesis, but a general methodology is sought that can be applied across multiple applications.

This project will in part be supported by a Programme Grant hosted by the University of Nottingham ‘Dialling up performance for on demand management’, with over 10 industrial partners supporting the projects. The studentship will have access to resources (physical, chemical and financial) to support the research, and also access to an exchange fund that enables extended research visits to collaborative institutions, including UC Berkeley, ETH Zurich and CSIRO.

Supervisors: Prof. Ricky Wildman (Faculty of Engineering), Prof Ender Ozcan (School of Computer Science), Dr Mirco Magnini (Faculty of Engineering).

For further details and to arrange an interview please contact Prof. Ricky Wildman (Faculty of Engineering).

AI for Digital-Twin Technology to Accelerate Development of Hydrogen Fuel-Cell Powered Aircraft (Engineering, Computer Science)

The development of real-time digital twins for physical systems has many potential benefits: reducing development time and costs by allowing investigation without having physical models; utilising real-time feeds from flying aircraft to highlight problems or deviations from expectations; improving the predictions of real aircraft performance. More accurate digital twins provide greater benefits; however, developing ever more accurate digital twins is becoming infeasible for human expertise alone.

This innovative project will look at the use of a variety of Artificial Intelligence techniques, including machine learning, optimisation techniques and automated synthesis of models and solutions, for the development of digital twins to accelerate the development of Hydrogen Fuel-cell powered aircraft.

Within this project, the student will review the existing technologies for digital twins and their applications, along with the current AI techniques that will be considered. They will develop skills in using real-time simulation platforms, such as Typhoon and SpeedGoat. They will develop, tune and evaluate potential digital twin models for a variety of components, including propulsion motors, power converters, fuel cells and batteries, utilising a variety of AI learning and optimisation techniques. These models will then be used to identify viable solutions for the aircraft.

Candidates should have a good degree in electrical engineering, aerospace engineering or computer science, with a strong interest in working in this cross-disciplinary area, with a particular interest in the development and application of AI and of electrical power systems.

Supervisors: Dr Jason Atkin (School of Computer Science), Prof. Tao Yang (Faculty of Engineering).

For further details and to arrange an interview please contact Prof. Tao Yang (Faculty of Engineering).

AI-based process control in composites manufacturing (Mathematical Sciences, Engineering)

Applications are invited for a PhD studentship to conduct interdisciplinary research in the rapidly evolving and exciting area of AI for manufacturing of fibre-reinforced polymer composites. A student working on this project will develop and test novel AI algorithms for control of composites manufacturing processes. The project will include developing effective surrogate models (e.g., via physics-informed neural operators) and active learning of the underlying physical processes. The algorithms will be used for on-the-fly estimation of properties of composite parts and for real-time control to produce parts according to customised specifications while avoiding defects (and hence reducing both CO2 footprint and cost of production). The project will include working with real data from experiments conducted in our Composites Research lab at the Faculty of Engineering as well as from a range of our industrial partners.

Supervisors: Prof Michael Tretyakov (School of Mathematical Sciences), Dr Andreas Endruweit (Faculty of Engineering), Dr Mikhail Matveev (Faculty of Engineering), Dr Marco Iglesias (School of Mathematical Sciences).

For further details and to arrange an interview please contact Prof Michael Tretyakov (School of Mathematical Sciences).

AI-Driven Asset Management Modelling for Offshore Wind Turbines (Engineering, Computer Science)

The offshore wind energy sector plays a crucial role in achieving global net-zero targets. However, the operation, inspection, and maintenance of offshore wind turbines (OWTs) remain significant challenges to industry. As wind farms expand into deeper waters and more remote locations, the complexity of managing system operation and maintenance increases. Ensuring the optimal performance and maintenance of critical components is vital as their deterioration and failure can lead to prolonged downtime and significant cost escalations, directly impacting the levelized cost of energy. Asset managers must navigate the challenges of long-term planning while balancing operational needs with financial constraints. With evolving technology and industry practices, continuous reassessment and refinement of operational and maintenance strategies are necessary to ensure performance efficiency and process sustainability.

The aim of this project is to develop a predictive maintenance system for offshore wind farms. This system will integrate newly developed AI-based models that predict failure times and probabilities of critical wind turbine components. It will consist of models for operation, inspection and maintenance, along with the capabilities of condition monitoring systems, to predict key performance metrics and to evaluate different failure prevention and mitigation strategies. An AI-based optimisation algorithm will consider cost, availability, energy efficiency, sustainability and crew safety to determine the optimal maintenance and inspection strategies, and reinforcement learning techniques will be used to continuously adapt these strategies in response to evolving operational conditions.

This project provides an excellent opportunity for students to explore wind energy from a holistic perspective. The proposed development of AI-based asset management modelling techniques will require close interaction with engineers and computer scientists and will offer opportunities to collaborate with industry partners in the wind energy sector, providing valuable practical experience and increasing the potential impact of research outcomes.

Supervisors: Dr Rasa Remenyte-Prescott (Faculty of Engineering), Dr Rundong Yan (Faculty of Engineering), Dr Lin Wang (Faculty of Engineering), Prof Dario Landa Silva (School of Computer Science), Dr Daniel Karapetyan (School of Computer Science).

For further details and to arrange an interview please contact Dr Rasa Remenyte-Prescott (Faculty of Engineering).

AI-Driven Modelling of Peatland Evolution for Land Surface Models (Mathematical Sciences, Chemical and Environmental Engineering)

Peat (water-saturated organic soil) contains 30% of soil carbon in 3% of the global land area. It provides a wide range of ecosystem services, and its behaviour as either a carbon sink or source is highly vulnerable to climate change. Predicting the distribution, quantity and behaviour of peat soils is, therefore, an important contributing factor in the Earth System Models (ESM) used to predict future climate. The part of the ESM used to predict peat is known as a Land Surface Model (LSM).

Land surface models capture the interactions between the land and the atmosphere, modelling the exchange of gases whilst incorporating effects of water, topography and vegetation. However, they are highly empirical with little foundation in the analysis of uncertainty in the underlying physics, and outputs are deterministic rather than probabilistic. Uncertainty is determined by running multiple models with different scenarios. They are also inflexible: introducing alternative model configurations to LSMs is extremely time-consuming, often taking many years. They are generally tuned against a very limited range of point measures and ignore large datasets and known peat distributions that could inform the models.

This project will exploit recent developments in AI, scientific machine learning and computational statistics to develop improved models for the role of peatlands designed to be incorporated into LSMs. Existing mathematical models which incorporate physical knowledge about peat mechanics and hydrology will be further improved and used as the basis for physics-informed machine-learning techniques which will enable the development of highly accurate, computationally efficient, data-driven surrogate representations of the computationally expensive mechanistic models. All sources of uncertainty will be incorporated in a fully probabilistic manner using methods from Bayesian statistics and Uncertainty Quantification to produce predictions of future states along with honest representations of uncertainty.

Prospective students should have a strong background in mathematical modelling or computational statistics, with strong computational skills and an interest in environmental modelling.

Supervisors: Dr Chris Fallaize (School of Mathematical Sciences), Dr Matteo Icardi (School of Mathematical Sciences), Prof David Large (School of Chemical and Environmental Engineering).

For further details and to arrange an interview please contact Dr Chris Fallaize (School of Mathematical Sciences).

AI-Enhanced Flood Response Modelling: Integrating FCMs, ABM, and VR for Disaster Resilience (Computer Science, Engineering)

Floods represent a critical challenge to human safety and infrastructure resilience. While existing flood models predominantly focus on hydrological and infrastructural aspects, academic research, and in some cases response planning, have largely overlooked human decision-making in this disaster scenario.

Understanding how individuals and communities respond to flood warnings is crucial for developing more effective evacuation strategies and minimising potential loss of life. Fuzzy Cognitive Maps (FCMs), a representation of knowledge in complex systems, offer a structured approach to modelling these intricate socio-environmental interactions. However, traditional FCMs often rely on expert-defined relationships that are subjective, may not fully capture the nuanced realities of human behaviour, and are difficult to scale up for modelling more complex decision scenarios. Recent advancements in AI and machine learning present an opportunity to dynamically refine these relationships, providing a more adaptive and responsive modelling approach.

The aim of this project is to work on novel methods for creating human-in-the-loop flood simulation environments that embed credible dynamically-generated FCMs for defining virtual human behaviour in these simulations. Given the impracticabilities of conducting research in real flood environments, this approach will adopt Virtual Reality (VR) technologies, allowing for the collection of realistic behavioural data.

This PhD offers a unique opportunity to develop cutting-edge AI techniques for understanding and mitigating flood risks, combining advanced computational methods with critical real-world applications. You will work on adaptive algorithms for real-time FCM weight optimisation, create an agent-based model (ABM) with virtual actors for testing and validating AI-enhanced FCMs, use the ABM to simulate flood scenarios, and implement iterative information exchange between the VR component and the ABM for investigating the impact of crowd behaviour on human-in-the-loop decision making. The expected outcomes for this project are a novel methodology for real-time FCM weight tuning, a framework linking individual human behaviour to group dynamic responses, and an integrated research platform combining VR and agent-based modelling for flood scenario decision support.
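As a rough illustration of what FCM inference and weight tuning can look like, the sketch below uses the commonly used sigmoid update rule for an FCM, followed by a simple delta-rule weight adjustment. It is not the project's method: the three concepts (warning received, perceived risk, decision to evacuate), the weight values, the learning rate and the function names `fcm_step` and `tune_weights` are all hypothetical, and the delta rule is only a stand-in for the adaptive tuning algorithms the project would develop.

```python
import math


def sigmoid(x):
    """Squash an activation into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def fcm_step(state, weights):
    """One FCM inference step.

    weights[i][j] is the causal influence of concept i on concept j.
    Each concept keeps its own activation and adds the weighted
    activations of the other concepts before squashing.
    """
    n = len(state)
    return [
        sigmoid(state[j] + sum(weights[i][j] * state[i] for i in range(n) if i != j))
        for j in range(n)
    ]


def tune_weights(weights, state, observed_next, lr=0.5):
    """Nudge existing edge weights towards an observed next state.

    Illustrative delta rule (gradient step on squared one-step error).
    Only non-zero edges are adjusted, and weights are kept in [-1, 1].
    """
    predicted = fcm_step(state, weights)
    n = len(state)
    tuned = [row[:] for row in weights]
    for j in range(n):
        error = observed_next[j] - predicted[j]
        slope = predicted[j] * (1.0 - predicted[j])  # derivative of the sigmoid
        for i in range(n):
            if i != j and weights[i][j] != 0.0:
                step = lr * error * slope * state[i]
                tuned[i][j] = max(-1.0, min(1.0, weights[i][j] + step))
    return tuned


def squared_error(predicted, observed):
    """Sum of squared differences between two activation vectors."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed))


# Hypothetical concepts: 0 = warning received, 1 = perceived risk, 2 = evacuate
weights = [
    [0.0, 0.6, 0.0],
    [0.0, 0.0, 0.5],
    [0.0, 0.0, 0.0],
]
state = [1.0, 0.2, 0.1]
observed_next = [1.0, 0.9, 0.7]  # made-up behavioural observation

tuned = tune_weights(weights, state, observed_next)
print(squared_error(fcm_step(state, weights), observed_next))
print(squared_error(fcm_step(state, tuned), observed_next))
```

In a human-in-the-loop setting, the observed next state would come from behavioural data (for example, collected in VR) rather than the made-up values used here.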

Supervisors: Dr Peer-Olaf Siebers (School of Computer Science), Dr Glyn Lawson (Faculty of Engineering), Dr Riccardo Briganti (Faculty of Engineering).

For further details and to arrange an interview please contact Dr Peer-Olaf Siebers (School of Computer Science).

Assessing feasibility and suitability of involving social robots within caregiver-child interactions (Psychology, Computer Science)

Social robots have been successfully integrated into customer service roles in offices, museums, hospitals and other public organizations. Ample evidence also points to their role in assisting engagement and education in neurodiverse settings. One related yet under-served area of research is the use of these robots in family and caregiving settings. The research challenge lies in developing social robots capable of more natural and intuitive interaction, which involves investigating not only the appearance and social capabilities of the robot, but also its relational role, autonomy, and social intelligence. Research into bidirectional human interaction is critical in this regard, and can allow the robot to mimic natural and realistic social interaction.

To this end, this project has three broad aims. First, it will query the feasibility and suitability of using social robots in family settings directly from caregivers and their children using a mixture of questionnaire and experimental methods. Here, we will assess how the emotional expressivity, movement, vocalisations and verbalisations of the social robot, and the smoothness of its transitions between actions and goals, can be improved during caregiver-facing and child-facing interactions. Second, we will develop machine-learning methods on an existing, rich dataset of videos from caregivers and children (part of a previously externally-funded project) to extract how caregivers use affect, touch, gestures, object engagement, instructions, language etc. to increase child engagement. This information, along with findings from the first aim, will be directly used to improve emotional expressivity in the robot. Finally, we will evaluate whether an evidence-driven approach (from achieving the first and second aims) to equipping social robots with the required social and emotional intelligence improves human-robot interaction experiences.

This PhD project will benefit from a strong multidisciplinary approach at the interface of Psychology, Robotics and Computer Science. Prospective PhD applicants are expected to be able to design questionnaires and experiments for adult-robot and child-robot interactions, and to apply machine learning and image processing tools and techniques to videos of interactions. Prior knowledge of machine learning, natural language processing and robotics is desirable but not essential.

Supervisors: Dr Sobana Wijeakumar (School of Psychology), Dr Nikhil Deshpande (School of Computer Science), Dr Aly Magassouba (School of Computer Science).

For further details and to arrange an interview please contact Dr Sobana Wijeakumar (School of Psychology).

Computer Vision-Based Monitoring of Colic in Horses (Veterinary Medicine & Health Sciences, Computer Science)

We are seeking a dynamic and passionate PhD student to join our team and help drive enhancements to equine health and welfare through AI-based monitoring. Through this PhD, you will have the unique opportunity to gain an exceptional skillset through interdisciplinary collaboration between the UoN School of Veterinary Medicine and Science, School of Computer Science, and industry partner Vet Vision AI, helping to shape the future of animal health and welfare.

Colic is the most common emergency problem in the horse, and a major cause of death. The disease can occur suddenly and unexpectedly when the horse is unobserved and deteriorate quickly. We have an internationally recognised research team who have generated primary research and an evidence-based educational campaign for horse owners and vets on early recognition and response to colic in the horse (REACT now to beat colic). This research has identified that 1 in 5 cases seen by vets are critical (require hospitalisation including surgery or intensive care or are euthanised/die), and has documented the early signs of colic, and the ‘red flag’ indicators of critical cases. The challenge is that many cases occur when the horse is unobserved (e.g. overnight) and deteriorate very quickly. Early recognition and treatment affect the chance of survival, especially for horses with strangulating or dying intestine. There are around 850,000 horses in the UK, and colic represents a major issue across the industry, and a major concern for horse owners.

Our research team, in collaboration with Vet Vision AI (www.vetvisionai.com) is developing computer vision AI algorithms which can monitor horses continuously in their stables, tracking normal behaviours, and identifying the early signs of colic. We are working with key equine hospitals to collect data from horses hospitalised for colic, with a large caseload of both medical and surgical cases. This project will develop and validate the existing algorithms to detect signs of colic using data from these horses.

The PhD student will work in an award-winning colic research team, and an exciting new computer vision team. The collaboration with Vet Vision AI gives access to camera systems, data collected from veterinary hospitals and existing validated algorithms to monitor normal behaviour and early-stage algorithms for colic signs.

The student will gain an advanced understanding of computer vision approaches to measure equine behaviour. These will include skills in training and evaluating computer vision models, augmentation, and synthetic data techniques. Alongside the ability to work with both academic and industry leaders in the fields of both computer vision and veterinary medicine and science, the applicant will have the opportunity to change how clinical cases of colic are detected and reported in horses nationally.

Supervisors: Dr Robert Hyde (School of Veterinary Medicine & Health Sciences), Professor Sarah Freeman (School of Veterinary Medicine & Health Sciences), Dr Rachel Clifton (School of Veterinary Medicine & Health Sciences), Professor Andrew French (School of Computer Science), Dr Valerio Giuffrida (School of Computer Science).

For further details and to arrange an interview please contact Dr Robert Hyde (School of Veterinary Medicine & Health Sciences).

Developing AI tools for Identifying novel antibiotics from complex metabolomics datasets (Chemistry, Pharmacy)

Bacteria and fungi produce a wide range of antibiotics to defeat competitors and pathogens, and we have co-opted these compounds for use in medicine. It has become clear that there are many undiscovered compounds out there, but identifying novel bioactive molecules from the complex metabolomes of these organisms is extremely challenging. This project will characterise the features of known antibiotics in metabolomic datasets and use this to identify compounds with these features for follow-up as novel antibiotics. The student will then have the opportunity to work in the laboratory with the O’Neill group to purify and characterise the most promising compounds they have identified for use as novel antibiotics.

This project works with analytical chemistry data and may involve work in a chemistry/biochemistry lab. Students with previous lab experience and knowledge of chemistry, and with a desire to complement this with enhanced skills in data science, may be particularly interested in this project.

Supervisors: Dr Ellis O’Neill (School of Chemistry), Prof. Charles Laughton (School of Pharmacy).

For further details and to arrange an interview please contact Dr Ellis O’Neill (School of Chemistry).

Energy requirements of neuromorphic learning systems (Psychology, Mathematical Sciences)

Very large neural networks are rapidly spreading into many parts of science, and have yielded some very exciting results. However, training large networks in particular requires a lot of energy. This energy is needed to compute, but also to store information in the synaptic connections between neurons. Interestingly, biological systems also require substantial amounts of energy to learn. Under metabolically challenging conditions, these requirements can be so large that in small animals learning reduces the lifespan. Based on these findings we have started to design algorithms that reduce the energy needed to train neural networks.

This project will explore energy requirements for learning in neuromorphic systems with memristors. Neuromorphic systems mimic the biological nervous system in their design principles and are currently being explored to create highly energy-efficient neural networks. Memristors are a key technology in such devices. Specifically, we will 1) develop models that describe the energy needs for learning in neuromorphic networks, 2) use inspiration from biology to design more energy-efficient algorithms and test them in simulations, and 3) compare these energy requirements with the energy needs of biology and of conventional hardware.

The ideal applicant will have a strong background in physics, mathematics, computer science or engineering with both analytical and programming skills. Interest in biology and/or engineering will be beneficial.

Supervisors: Prof. Mark van Rossum (School of Psychology and School of Mathematical Sciences), Dr Neil Kemp (School of Physics and Astronomy).

For further details and to arrange an interview please contact Prof. Mark van Rossum (School of Psychology and School of Mathematical Sciences).

Experimental and computational neuroscience modelling of artificial spiking neuron networks (Physics and Astronomy, Mathematical Sciences)

This project combines experimental research on artificial spiking neurons made from oxide thin films with computational neuroscience studies on artificial neural networks and spiking neural networks. The successful candidate will have the opportunity to work at the overlap of computer science and physics of oxide electronic devices contributing to the development of next generation neuromorphic technologies.

The study of artificial spiking neurons and neural networks is a rapidly growing field with applications for both fundamental neuroscience and practical A.I. computations. Artificial spiking neurons mimic the behaviour of biological neurons, offering a promising approach to understanding brain function and developing advanced computational systems. By integrating experimental and computational methods, this project aims to bridge the gap between material-based neural realisations and theoretical neural network frameworks, paving the way for innovative solutions in artificial intelligence, neuromorphic computing, and brain-machine interfaces.

Incorporating experimental artificial neurons into the spiking neural networks (SNNs) studied by computer scientists is a promising way to boost energy efficiency. However, training complexity, hardware integration, long-term and short-term memory implementations, biological plausibility, and data integration remain open issues.

In this project, you will fabricate artificial spiking neurons using silicon dioxide and niobium oxide films, characterise their material and electrical properties, connect these neurons to form primitive neural networks, realise spiking behaviour in individual neurons and in the network, perform simulations to model the behaviour of the neural networks, and integrate experimental data into artificial neural network and spiking neural network models.

Supervisors: Dr Neil Kemp (School of Physics and Astronomy), Prof Stephen Coombes (School of Mathematical Sciences).

For further details and to arrange an interview please contact Dr Neil Kemp (School of Physics and Astronomy).

Human-AI collaboration for medical data mapping using Large-Language Models recommendations, decision explanations and feedback (Medicine & Health Sciences, Computer Science, Engineering)

Converting medical terms into a standard format using the OMOP Common Data Model (CDM) is essential for making healthcare data easier to find, share, and use in research. However, different healthcare systems often use medical standards inconsistently, making it difficult to compare and analyse data effectively. Standardising data is crucial for ensuring reliable research and medical insights, but the process is complex and requires input from healthcare professionals, data engineers, and software developers. Tools have been developed to help with this process, but manual effort is still needed to check and approve data mappings.

Recently, Large Language Models (LLMs), like OpenAI’s ChatGPT, have been explored as an alternative to improve the accuracy of medical term conversion. These models can provide more meaningful suggestions and reduce manual effort. However, using AI in healthcare raises concerns about data privacy, security, and compliance with regulations like GDPR, particularly when handling sensitive patient information. Ensuring that AI tools are used safely and responsibly remains a key challenge.

This project will use a Large Language Model (LLM) as a recommendation system, with a human in the loop, to improve the efficiency and accuracy of OMOP mapping. Instead of fully automating this process, we will use the LLM to make recommendations, which will then be visualised, checked and refined by the users. The system will also learn user preferences through feedback over time and ensure data privacy and ethics. Those preferences will be used to refine recommendations and keep track of data mapping provenance.

The ideal candidate should have a strong foundation in mathematics, machine learning and software engineering; experience developing LLM-based tools is desirable.
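The feedback loop described above could look something like the following minimal sketch. It is not the project's design: the class `MappingRecommender`, its methods, the scoring rule and the example terms and scores are all hypothetical, and the candidate scores stand in for whatever an LLM would actually return.

```python
from collections import defaultdict


class MappingRecommender:
    """Re-ranks candidate mappings using reviewer feedback (illustrative only)."""

    def __init__(self, feedback_weight=0.1):
        self.feedback_weight = feedback_weight
        # (source_term, concept) -> number of times a reviewer approved it
        self.accept_counts = defaultdict(int)
        # Audit trail of reviewer decisions, i.e. mapping provenance
        self.provenance = []

    def rank(self, source_term, candidates):
        """candidates: list of (concept, model_score) pairs, e.g. from an LLM."""
        def adjusted(item):
            concept, score = item
            bonus = self.feedback_weight * self.accept_counts[(source_term, concept)]
            return score + bonus
        return sorted(candidates, key=adjusted, reverse=True)

    def record_decision(self, source_term, concept, reviewer):
        """Store an approval so that later rankings reflect user preferences."""
        self.accept_counts[(source_term, concept)] += 1
        self.provenance.append(
            {"term": source_term, "concept": concept, "reviewer": reviewer}
        )


# Made-up candidates and scores for a single source term.
recommender = MappingRecommender()
candidates = [("Cardiac arrest", 0.60), ("Myocardial infarction", 0.55)]
before = recommender.rank("heart attack", candidates)
recommender.record_decision("heart attack", "Myocardial infarction", reviewer="reviewer_1")
after = recommender.rank("heart attack", candidates)
print(before[0][0], "->", after[0][0])
```

The model's suggestion is only a starting point in this sketch: a single reviewer approval is enough to change the ranking, and each decision is logged so the mapping can be traced later.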

Supervisors: Dr Kai Xu (School of Computer Science), Dr Grazziela Figueredo (Faculty of Medicine & Health Sciences and School of Computer Science), Prof. Philip Quinlan (Faculty of Engineering), Dr Tim Beck (Faculty of Medicine & Health Sciences).

For further details and to arrange an interview please contact Dr Grazziela Figueredo (Faculty of Medicine & Health Sciences and School of Computer Science).

Imaging molecules in action: from atoms to energy materials (Chemistry, Computer Science)

Over the past 300 years, scientists have devised many ways to illustrate atoms and molecules and their behaviour, using everything from elemental symbols to sophisticated 3D models. It’s easy to think these representations are reality, but how we picture molecules is usually based on bulk measurements where information is averaged over billions of billions of molecules (spectroscopy) or reciprocal space (diffractometry). Recently, electron microscopy has begun to revolutionise the way we see matter, allowing us to glimpse atomically resolved images of individual molecules. Early breakthroughs are exciting, but they have raised three key questions:

• What does it mean to ‘see a molecule’?

• How can we reconstruct the 3D shape of a molecule from 2D micrographs?

• How can we achieve resolution in space and time for tracking the movement of individual molecules?

In this project, we aim to answer fundamental questions related to electron microscopy by advancing image analysis methods. We plan to make a significant step-change by applying advanced image analysis methods (Xin Chen) to problems related to single-molecule imaging (Andrei Khlobystov). While sub-Angstrom resolution can be achieved routinely, there's a pressing need for robust techniques to denoise electron microscopy images that allow comparing experimental and theoretically simulated images. For example, image denoising using a diffusion probabilistic model has the potential to identify atom positions while simultaneously enhancing the temporal resolution of electron microscopy. This breakthrough in spatiotemporal continuity of atomic imaging could allow us to watch molecules in action and film chemical reactions with atomic resolution in real-time.

The Nanoscale & Microscale Research Centre at Nottingham, along with leading European centres in Ulm, Germany and Diamond Light Source in the UK, will provide training for the student to image molecules with atomic resolution. In addition, the student will learn to develop modern artificial intelligence algorithms for image processing and analysis to enhance the data to the highest level of spatiotemporal resolution.

The project aims to revolutionize the imaging of molecules, turning it into a valuable tool for discovering new chemical processes. This will be particularly useful in catalysis and net-zero technologies, which are crucial for 70% of the UK chemical industry. The University of Nottingham holds the largest EPSRC grant in this area (EP/V000055/1).

Supervisors: Prof Andrei Khlobystov (School of Chemistry), Dr Xin Chen (School of Computer Science)

For further details and to arrange an interview please contact Prof Andrei Khlobystov (School of Chemistry).

Improving reliability of medical processes using system modelling and AI techniques (Engineering, Computer Science)

Adverse events and preventable failures in healthcare services represent a key area of patient safety that can be improved with the use of computer vision approaches to system analysis. For many clinical procedures there can be multiple deviations in service delivery, which influence process reliability, the efficient use of hospital resources and the risk to staff and patient safety. Hence, a model that integrates these various aspects of a procedure is essential for a more accurate analysis and for optimising service performance. Computer vision approaches, such as those for object identification and action recognition, can help to automatically identify deviations in both the technical and, potentially, the non-technical skills of medical staff, such as poor team dynamics, problems with communication and a lack of leadership. This automatically obtained data can then be fed into the models that are used to evaluate a range of deviations from guidelines, including their interactions and effects on procedure outcomes, and used to support operational and strategic decisions. This approach is expected to provide a more convenient and efficient way of acquiring and analysing healthcare service performance data in the future. However, such approaches can only succeed if patients are placed at the heart of the technology, which must be designed with the patients’ perspective in mind.

Proposed project:

This project will investigate commonly observed deviations in healthcare services and opportunities for identifying such deviations using computer vision approaches. It will demonstrate how deviation data can be used in computer-based simulation models, which are used to evaluate the effects of deviations and to support decisions. It will focus on investigating how deviation data can be used in real-time decision-making, how accepting patients and staff are of such technologies, and what integral role they should play in evaluating such technologies and ensuring their uptake. The proposed method will potentially be applied to processes carried out in an operating theatre.

Benefits of joining this project:

This project will give the student an opportunity to explore the area of healthcare process modelling from a holistic perspective, considering various elements in the process. The integration of computer vision techniques will also enhance the value of this work. The student will gain not only experience of and insight into healthcare service characteristics but also skills in one of the most sought-after areas of technology today.

Supervisors: Dr Rasa Remenyte-Prescott (Faculty of Engineering), Dr Joy Egede (School of Computer Science).

For further details and to arrange an interview please contact Dr Rasa Remenyte-Prescott (Faculty of Engineering).

Intelligent Vehicle-2-Grid Integration: A Novel Approach to Community-Scale Energy Storage (Engineering, Computer Science)

As the growth of renewable energy continues to accelerate, there is a need for large-scale energy storage to effectively integrate these intermittent supplies into our electricity networks. Batteries are an important technology to help address this need; however, stationary batteries have a high initial cost, limited scalability, and fixed capacity. The parallel growth of electric vehicles provides an opportunity to utilise their collective battery capacity to develop a flexible, expandable, distributed energy storage network that maximises local renewable energy utilisation while minimising infrastructure investments.

This project will explore the use of artificial intelligence to optimise the use of Vehicle-to-Grid (V2G) technology to create collective batteries from the capacity of electric vehicles. The aim is to develop an AI framework to predict and synchronise key elements within a community energy system including renewable energy generation, community energy consumption, EV availability, and aggregated storage capacity. The resulting system will enhance grid resilience, accelerate renewable integration, and create a participatory energy model where community members actively contribute to sustainability goals while potentially generating revenue through grid services.

The ideal candidate will have a passion for sustainability, a strong aptitude for data analysis, and a background in machine learning or artificial intelligence. Successful applicants will benefit from exposure to previous community energy projects and previous work on the application of cutting-edge machine learning technology in related domains.

Supervisors: Dr Rob Shipman (Faculty of Engineering), Prof. Rong Qu (School of Computer Science).

For further details and to arrange an interview please contact Dr Rob Shipman (Faculty of Engineering).

Investigating the capacity, impact and governance of Generative AI use in sourcing and procurement: A multi-case study analysis (Business, Computer Science)

This project will conduct rigorous empirical research investigating the capacity, impact and governance of using generative artificial intelligence (AI) models in procurement and sourcing - key functions that are heavily scrutinised within every organisation to ensure organisational compliance with evolving supply chain regulations. The project will focus specifically on how generative AI, including large language models and deep learning algorithms, may be designed and applied to optimise sourcing processes, decisions and assessment, including the potential this has for increasing operational efficiency and optimising supplier selection, contracts management, cost analysis and risk management. At the same time, it will identify the potential unintended negative consequences of this use and explore the role that governance can play in preventing or mitigating them. The research will collate empirical data using multiple case studies of external organisations in different sectors at different stages of generative AI adoption. Analysis of the data will: (1) identify the type of generative AI models used, application area and associated performance and cost implications, (2) identify the intended and unintended consequences associated with generative AI adoption in sourcing decision-making, including governance risks and ethical concerns, (3) analyse regulatory compliance requirements and organisational policies governing AI-assisted sourcing operations, (4) investigate enterprise-level governance structures and risk control mechanisms, mitigating AI-driven biases and uncertainties, (5) develop novel methodologies for identifying and assessing the operational, social, and ethical implications of using AI-driven systems in sourcing and procurement, and (6) propose governance models and other responsibility mechanisms to mitigate biases, address other unintended negative consequences and ensure compliance with legal and ethical standards.

Please note that this opportunity will be for PhD by papers. Applicants will need to have some familiarity with generative AI architectures and strong analytical skills to proficiently synthesise qualitative data and model governance structures. Prior knowledge of supply chain management and procurement is preferable.

Supervisors: Dr Wafaa Ahmed (Business School), Dr Helena Webb (School of Computer Science).

For further details and to arrange an interview please contact Dr Wafaa Ahmed (Business School).

Large language model-aided ontology-based knowledge modelling in built environment contract management (Engineering, Computer Science)

Large Language Models (LLMs) have demonstrated significant capabilities in processing and generating natural language. In the built environment context, contractual information management is a critical yet highly intricate domain, often characterised by fragmented documentation, ambiguous terminology, and multi-party interactions. Approaches to managing contractual information that rely purely on LLMs struggle with consistency, retrieval efficiency, and interpretability. In particular, they suffer from inconsistent interpretation of complex legal language, susceptibility to hallucination, difficulty in retrieving precise contractual clauses, and difficulty in integrating unstructured textual data into a structured decision-making process. An ontology-based knowledge modelling approach can provide a structured and semantically enriched framework to enhance the application of LLMs in this domain.

This PhD study leverages ontology-based methods to formalise the representation of contractual knowledge, providing a structured, visualisable, semantically enriched, and interpretable structure that can be integrated with LLMs to facilitate more accurate and consistent reasoning, query handling and automated document analysis. By integrating the general-purpose legal ontologies (e.g., LKIF Core, EGODO, etc.) with the built environment domain-specific ontologies (e.g., IFC, e-COGNOS, etc.) for contractual information in the built environment, we aim to improve LLMs' contextual understanding and application, allowing for more accurate and relevant text generation, clause interpretation, and risk assessment for building and infrastructure projects. The retrieval-augmented generation (RAG) techniques are incorporated to further enhance LLM performance, enabling models to dynamically retrieve relevant external information before generating responses and improving contract analysis's precision, interpretability, and reliability, reducing hallucination effects and enhancing trust in AI-generated outputs.
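To make the retrieval step of RAG concrete, here is a deliberately simple sketch: it scores clauses against a question using bag-of-words cosine similarity and places the best match in a prompt. This is an illustrative assumption rather than the project's method. A real system would more likely use learned embeddings and ontology-aware retrieval, and the clauses, function names and prompt layout below are all invented for the example.

```python
import math
from collections import Counter

PUNCTUATION = ".,;:()?"


def tokenize(text):
    """Lower-case the text and strip surrounding punctuation from each word."""
    words = (w.strip(PUNCTUATION).lower() for w in text.split())
    return [w for w in words if w]


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, clauses, top_k=1):
    """Return the top_k clauses most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        clauses, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True
    )
    return ranked[:top_k]


def build_prompt(query, clauses, top_k=1):
    """Prepend retrieved clauses as context before the question goes to an LLM."""
    context = "\n".join(retrieve(query, clauses, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Invented example clauses, not taken from any real contract.
CLAUSES = [
    "The contractor shall complete the works by the completion date.",
    "Payment shall be made within thirty days of the invoice.",
    "Either party may terminate the contract after written notice.",
]
QUESTION = "When must payment be made?"
print(build_prompt(QUESTION, CLAUSES))
```

Grounding the answer in a retrieved clause, rather than in the model's memory alone, is what is expected to reduce hallucination and make the output easier to check.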

This project is aligned with ongoing research initiatives in digital construction and AI-driven knowledge management, benefiting from interdisciplinary collaboration between engineering and computer science experts. The studentship will draw on resources from an industrial partner (a UK-based blockchain research and engineering company), including domain-specific datasets and computing infrastructure, to support the research.

The ideal candidate should have a strong foundation in mathematics and machine learning theories and considerable experience developing LLM-based tools.

Supervisors: Dr Zigeng Fang (Faculty of Engineering), Dr Kai Xu (School of Computer Science).

For further details and to arrange an interview please contact Dr Zigeng Fang (Faculty of Engineering).

Multimodal AI-powered Large Brain Model (LBM) for Brain Tumour Detection and NLP enhanced Medical Report Generation (Computer Science, Medicine & Health Sciences)

The detection and characterisation of brain tumours are critical challenges in modern healthcare, demanding precision, efficiency, and interpretability. Traditional diagnostic workflows rely heavily on radiologists’ expertise to analyse imaging modalities such as Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) scans. These methods, while effective, are time-consuming, subject to waiting times and prone to variability due to human interpretation. Moreover, the process of documenting findings and generating comprehensive medical reports represents a major proportion of the time taken to deliver the imaging diagnosis. Recent advancements in Artificial Intelligence (AI) present an opportunity to revolutionise this domain by automating tumour detection and report generation.

This project proposes the development of a multimodal non-binary classification AI-powered Large Brain Model (LBM) to address these challenges. The LBM is a multimodal non-binary classification AI system which will integrate multiple data sources for the accurate classification of brain tumours and standardised medical report generation through Natural Language Processing (NLP) and sentiment analysis. The system seeks to accelerate reporting turnaround and increase reproducibility whilst achieving expert-level accuracy in imaging diagnosis to ultimately improve patient outcomes. The primary aim is to create a unified framework that integrates multimodal imaging data with state-of-the-art AI techniques for brain tumour detection alongside sentiment analysis for automated medical report generation. By leveraging deep learning architectures for image segmentation and non-binary classification, combined with transformer-based sentiment analysis, the LBM system aims to deliver rapid, highly accurate reports with regard to disease extent and characteristics. The innovation of this project lies in its objective reporting approach, which fuses data from diverse imaging sources (FLAIR, T1-weighted, T2-weighted and T1CE) to provide a rapid, quantitative and standardized report. Advanced techniques, such as 3D convolutional neural networks (CNNs) and U-Net [1] variants, will be employed for tumour localization and non-binary classification. Simultaneously, the NLP component will synthesise imaging findings into correct and clinically impactful reports, enhancing communication between radiologists and referrers. This study aims at economically attractive workflow acceleration and report standardization in favour of the BT-RADS (https://btrads.com) framework, where reports are currently variable and, in most cases, qualitative.

This project forms a collaboration with the UoN’s School of Medicine and Precision Imaging Centre (https://www.nottingham.ac.uk/research/beacons-of-excellence/precision-imaging/index.aspx). We will use standard multimodal brain tumour image segmentation benchmarks (e.g. BRATS [2] data), further public datasets and anonymized NHS imaging data, together with state-of-the-art imaging facilities that will enhance this analysis and prediction. Finally, a new 3D visualization system will be developed to support interaction between the automatically generated report and the human radiologist's evaluation.

Supervisors: Dr Armaghan Moemeni (School of Computer Science), Dr Steffi Thust (School of Medicine & Health Sciences).

For further details and to arrange an interview please contact Dr Armaghan Moemeni (School of Computer Science).

Uncertainty quantification for machine learning models of chemical reactivity (Chemistry, Mathematical Science)

In this PhD project, we will develop and implement approaches for estimating the uncertainty in AI predictions of chemical reactivity, to help strengthen the interaction between human chemists and machine learning algorithms and to assess when AI predictions are likely to be correct and when, for example, first principles quantum chemical calculations might be helpful.

Predicting chemical reactivity is, in general, a challenging problem and one for which there is relatively little data, because experimental chemistry takes time and is expensive. Within our research group, we have a highly automated workflow for high-level quantum chemical calculations and we have generated thousands of examples relating to the reactivity of molecules for a specific chemical reaction. This project will evaluate a variety of machine learning algorithms trained on these data and, most crucially, will develop and implement techniques for computing the uncertainty in the prediction.
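One common way to attach an uncertainty to a prediction is to train an ensemble of models on bootstrap resamples of the data and treat the spread of their predictions as a rough confidence measure. The sketch below shows this with a simple straight-line model. It is an assumption chosen for illustration, not the method the project will necessarily adopt, and the toy data, function names and the use of a linear model are all hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def bootstrap_ensemble(xs, ys, n_models=200, seed=0):
    """Fit one line per bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    while len(models) < n_models:
        idx = [rng.randrange(n) for _ in range(n)]
        sample_x = [xs[i] for i in idx]
        if len(set(sample_x)) < 2:
            continue  # skip degenerate resamples that cannot define a slope
        models.append(fit_line(sample_x, [ys[i] for i in idx]))
    return models


def predict_with_uncertainty(models, x):
    """Ensemble mean and standard deviation of the prediction at x."""
    preds = [slope * x + intercept for slope, intercept in models]
    return statistics.fmean(preds), statistics.stdev(preds)


# Toy data (made up): a descriptor value x and a noisy response y.
xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [0.1, 0.9, 2.2, 2.8, 4.1, 5.2, 5.8]
models = bootstrap_ensemble(xs, ys)

near_mean, near_sd = predict_with_uncertainty(models, 1.5)   # inside the data range
far_mean, far_sd = predict_with_uncertainty(models, 10.0)    # far outside it
print(near_sd < far_sd)
```

The spread grows for inputs far from the training data, which is the kind of signal that could be used to decide when a prediction should be checked with a first-principles calculation.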

The algorithms developed in the project will be implemented in our ai4green electronic lab notebook, which is available as a web-based application: http://ai4green.app and which is the focus of a major ongoing project supported by the Royal Academy of Engineering. The results of the project will help chemists to make molecules in a greener and more sustainable fashion, by identifying routes with fewer steps or routes involving more benign reagents.

Applicants should have, or expect to achieve, at least a 2:1 Honours degree (or equivalent if from other countries) in Chemistry or Mathematics or a related subject. An MChem/MSc 4-year integrated Masters, a BSc + MSc or a BSc with substantial research experience will be highly advantageous. Experience in computer programming will be essential.

Supervisors: Prof. Jonathan Hirst (School of Chemistry), Prof. Simon Preston (School of Mathematical Sciences).

For further details and to arrange an interview please contact Prof. Jonathan Hirst (School of Chemistry).

Using facial and vocal tract dynamics to improve speech comprehension in noise (Psychology, Computer Science)

Around 18 million individuals in the UK are estimated to have hearing loss, including over half of the population aged 55 or more. Hearing aids are the most common intervention for hearing loss; however, one in five people who should wear hearing aids do not, and a failure to comprehend speech in noisy situations is one of the most common complaints of hearing aid users. Difficulties with communication negatively impact quality of life and can lead to social isolation, depression and problems with maintaining employment.

Clearly, there is a growing need to make hearing aids more attractive and one route to achieve this is to enable users to better understand speech in noisy situations. Facial speech offers a previously untapped source of information which is immune to auditory noise. Importantly, auditory noise includes sounds that are spectrally similar to the target voice, such as competing voices, which are particularly challenging for the noise reduction algorithms currently employed in hearing aids. With multimodal hearing aids, which capture a speaker’s face using a video camera, already in development, it is now vital that we establish how to use facial speech information to augment hearing aid function.

What you will do:

This PhD project offers the opportunity to explore how the face and voice are linked to a common source (the vocal tract) with the aim of predicting speech from the face alone or combined with noisy audio speech. You will work with a state-of-the-art multimodal speech dataset, recorded in Nottingham, in which facial video and voice audio have been recorded simultaneously with real-time magnetic resonance imaging of the vocal tract (for examples, see https://doi.org/10.1073/pnas.2006192117). You will use a variety of analytical methods including principal components analysis and machine learning to model the covariation of the face, voice and vocal tract during speech.

Who would be suitable for this project?

This project would equally suit a computational student with an interest in applied research or a psychology/neuroscience student with an interest in developing skills in programming, sophisticated analysis and AI. You should have a degree in Psychology, Neuroscience, Computer Science, Maths, Physics or a related area. You should have experience of programming and a strong interest in speech and machine learning.

Supervisors: Dr Chris Scholes (School of Psychology), Dr Joy Egede (School of Computer Science), Prof Alan Johnston (School of Psychology).

For further details and to arrange an interview please contact Dr Chris Scholes (School of Psychology).

Vision and Language Foundational Models for Plant Science (Computer Science, Bioscience)

Plant science research is increasingly generating large and complex datasets. Despite advancements in high-throughput phenotyping, remote sensing, and precision agriculture, effectively integrating and analysing these diverse data sources remains a major bottleneck. Many plant scientists lack the specialised artificial intelligence (AI) expertise required to harness the full potential of modern machine learning techniques. This PhD project aims to bridge this gap by developing novel AI-driven methodologies that support the entire research lifecycle—from literature review and hypothesis formation to large-scale data analysis.

The focus is to develop specialised Vision-Language Models (VLMs) and Large Multimodal Models (LMMs) designed specifically for plant science. These models will facilitate seamless integration and interpretation of heterogeneous datasets, enabling more streamlined research. A key objective is to design AI workflows that generate reproducible and scalable data analysis pipelines, optimising research efficiency while ensuring transparency and accessibility.

To achieve this, another focus of this project will be to develop and train a dedicated plant foundational model tailored to plant data analysis, forming the backbone of our framework. The VLMs/LMMs will serve as the front-end of an AI-powered agent, capable of understanding the needs of plant biologists and executing complex, data-intensive analyses through a unified foundational model.

By tackling challenges such as multimodal data integration, AI-assisted scientific communication, and automated experimental design, this project aims to revolutionise plant research. The outcomes will accelerate scientific discovery in plant biology, while also fostering interdisciplinary collaboration by making cutting-edge AI tools more accessible to researchers from diverse backgrounds.

We welcome applicants with a strong background in machine learning, computer vision, and/or artificial intelligence, as well as an interest in applying these techniques to biological research. Experience with scientific programming in Python and familiarity with deep learning frameworks such as PyTorch is highly beneficial. Prior knowledge of plant science is not required, but an eagerness to work at the intersection of AI and life sciences is essential.

Supervisors: Dr Valerio Giuffrida (School of Computer Science), Dr Darren Wells (School of Bioscience), Dr Jonathan Atkinson (School of Bioscience).

For further details and to arrange an interview please contact Dr Valerio Giuffrida (School of Computer Science).

WormAI: Next-Generation AI for Dynamic and Integrated Nematode Phenotyping (Pharmacy, Physics & Astronomy)

Nematodes are among the most completely understood animals on the planet, yet their phenotypic and behavioural intricacies remain largely untapped. Despite detailed insights into their genetics and development, the effects of pharmaceuticals, genetic variability and species interactions are still elusive. This PhD project will demonstrate that by uniting synthetic image generation, refined object detection, and robust regression analysis into a transformative platform, these limitations can be overcome. Foundational AI development will occur in Year 1, followed by capturing dynamic behavioural changes under pharmaceutical exposure (Year 2), and culminating in the characterisation of genetic variability and interspecies interactions (Year 3).

The student will acquire skills in machine learning and image processing, ultimately becoming an expert in automated phenotypic analysis and computational biology. By merging deep generative models with advanced detection networks, transfer learning and regression techniques, our approach promises a scalable and precise system that transcends traditional manual analysis and opens exciting new avenues in automated computational biology.

Supervisors: Dr Veeren Chauhan (School of Pharmacy), Dr Maggie Lieu (School of Physics & Astronomy)

For further details and to arrange an interview please contact Dr Veeren Chauhan (School of Pharmacy).

Further information

For further enquiries, please contact Professor Ender Özcan - School of Computer Science